I wasn't even awake at that time.

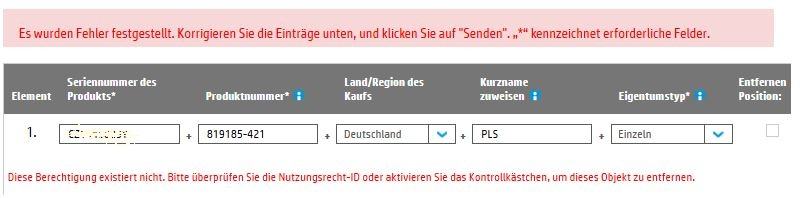

The disk errors appeared in the event log before cissesrv logged any errors. I would actually have expected the RAID controller to log first, followed by Windows logging the read/write errors.

Below is a screenshot of the disk errors that were logged immediately before the cissesrv errors.

[Attachment 358671]

At the moment I'm leaning toward sending this Toshiba drive (labeled as Intenso) back to Alternate as well.

Tonight I'll run a stress test on the other WD drive and see whether I can provoke it into dropping out of the (fake) RAID too (with a larger copy job, nothing drastic).
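A copy-based stress test like the one described above could be scripted roughly as follows. This is a minimal sketch, assuming Python 3 and a target folder on the drive under test; the helper names `copy_stress_test` and `sha256_of` are hypothetical. It writes a file of random data, copies it to the target several times, and compares SHA-256 hashes, so a failing write shows up either as a hash mismatch or as an `OSError` (which should also leave entries in the event log). The read-back may be served from the OS cache, so this mainly exercises the write path and is no substitute for a vendor diagnostic.

```python
import hashlib
import os
import shutil
import tempfile


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def copy_stress_test(target_dir: str, size_mb: int = 1024, rounds: int = 3) -> bool:
    """Hypothetical helper: copy random data to target_dir repeatedly and verify it.

    Returns True if every copy matched the source hash, False on the first mismatch.
    OSErrors (e.g. the drive dropping out mid-copy) propagate to the caller.
    """
    chunk = 1 << 20  # write the source file in 1 MiB chunks
    with tempfile.TemporaryDirectory() as src_dir:
        # The source file lives in the system temp folder, not on the drive under test.
        src = os.path.join(src_dir, "stress_source.bin")
        with open(src, "wb") as f:
            for _ in range(size_mb):
                f.write(os.urandom(chunk))
        expected = sha256_of(src)

        for i in range(rounds):
            dst = os.path.join(target_dir, f"stress_copy_{i}.bin")
            shutil.copyfile(src, dst)
            ok = sha256_of(dst) == expected
            os.remove(dst)  # clean up so repeated rounds do not fill the drive
            if not ok:
                print(f"round {i}: hash mismatch on {dst}")
                return False
            print(f"round {i}: OK")
    return True
```

For example, `copy_stress_test(r"E:\stresstest", size_mb=4096)` would copy a 4 GiB file three times to a (hypothetical) folder `E:\stresstest`; the event log can then be checked for disk or cissesrv entries logged during the run.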

The SSD, however, runs flawlessly; it is also set up as a single logical RAID 0 drive.

First of all, sorry for writing in English; my German is still at the beginner-course stage.

I have exactly the same problem, starting this morning at 7:47. Bad blocks were reported on Disk 1, then at 13:41 on Disk 4 and Disk 3. It can't be that 3 of the 4 hard drives developed problems overnight.

Lots of entries like this were logged:

The IO operation at logical block address 0x1f89fef8 for Disk 3 (PDO name: \Device\00000034) failed due to a hardware error.

Also this:

Disk 4 has been surprise removed.

In the morning I was able to reactivate the disks by restarting the HP MicroServer Gen8, and one of my Windows Server 2012 R2 managed logical volumes started resyncing its mirror; the Gen8 showed a blue light during the resync. Then in the afternoon the server went back to flashing red, the drives disappeared again, and this time it took my third HDD as well.

Since then, I cannot reactivate the disks, even after restarting the server.

The provider encountered an error while starting regeneration tasks for the volume's plexes. status=C038004B, Volume=UNKNOWN

Here are some pictures.

This is not normal, and I bet the disks are not faulty! One of the 4 HDDs is still working; I'm copying data off it now and hope the server won't mark it as faulty too.

Two weeks ago I set up this configuration (2x 2 TB WD Green, 2x 3 TB WD NAS, 1x EVO 850 in the ODD bay) as RAID 0 managed by the HP server software, and split it into NTFS volumes in Windows Server 2012 R2.

Previously the drives were in my PC without any problems; I tested them beforehand and found no bad sectors or other issues. The server sits behind an APC UPS that has been reliable for several years, and besides the power outlets, the LAN is protected as well.

I bought this MicroServer Gen8 for NAS and backup purposes, and now I have nearly lost all my data because the server somehow ruined the drives? This is unacceptable. I don't have any other backups; I bought this precisely to manage my backups with mirrored volumes.

I've also considered that this could be a RAID controller problem, possibly fixed by the latest HP support package from April (which I haven't applied yet), but I'm afraid that if I apply it now, all the drives could end up unrecoverable or unreadable.

Depending on the restart, sometimes 2, sometimes 3, and now 4 of the 5 drives are visible in Device Manager:

Basically, I'm not a newbie; I've solved lots of problems on my own, but now I have no idea what is really happening.

Any help would be very welcome; I'm really desperate now.

How can I reactivate the drives to back up the data from the simple and mirrored volumes?

Many thanks to anyone who can help me solve this!